45 soft labels deep learning

Understanding Dice Loss for Crisp Boundary Detection - Medium Therefore, the DSC ranges from 0 to 1, and larger values are better. Thus we can use 1 - DSC as the Dice loss, so that minimizing the loss maximizes the overlap between the two sets. In boundary detection tasks, the ground truth ...

Softmax Classifiers Explained - PyImageSearch Understanding Multinomial Logistic Regression and Softmax Classifiers. The Softmax classifier is a generalization of the binary form of Logistic Regression. Just like in hinge loss or squared hinge loss, our mapping function f is defined such that it takes an input set of data x and maps them to the output class labels via a simple (linear) dot ...
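To make the Dice loss idea concrete, here is a minimal pure-Python sketch of a "soft" Dice coefficient and the 1 - DSC loss. It assumes predictions and ground truth are flattened masks of equal length with values in [0, 1]; the function names and the `eps` smoothing term are illustrative choices, not taken from the Medium article.

```python
def dice_coefficient(pred, target, eps=1e-7):
    """Soft Dice similarity coefficient: 2 * sum(p * t) / (sum(p) + sum(t)).

    `pred` and `target` are flattened masks with values in [0, 1].
    `eps` (an illustrative choice) avoids division by zero for empty masks.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    return (2.0 * intersection + eps) / (total + eps)


def dice_loss(pred, target):
    """Dice loss = 1 - DSC, so minimizing the loss maximizes overlap."""
    return 1.0 - dice_coefficient(pred, target)


# Perfect overlap gives a loss near 0; disjoint masks give a loss near 1.
print(dice_loss([1, 0, 1, 0], [1, 0, 1, 0]))
print(dice_loss([1, 0], [0, 1]))
```

Because the products p * t are used directly instead of thresholded values, the same formula works for soft (probabilistic) predictions, which is what makes it usable as a training loss.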

Label-Free Quantification You Can Count On: A Deep Learning ... - Olympus Although it shows excellent correspondence between the two methods, the total number of objects detected with deep learning was around 3% higher. Figure 2: Nuclei detected using fluorescence (left), the corresponding brightfield image (middle), and object shape predicted by deep learning technology (right).


Deep learning enables accurate clustering with batch effect ... May 11, 2020 · Single-cell RNA sequencing (scRNA-seq) can characterize cell types and states through unsupervised clustering, but the ever-increasing number of cells and batch effects impose computational challenges.

An Overview of Multi-Task Learning for Deep Learning May 29, 2017 · So far, we have focused on theoretical motivations for MTL. To make the ideas of MTL more concrete, we will now look at the two most commonly used ways to perform multi-task learning in deep neural networks. In the context of Deep Learning, multi-task learning is typically done with either hard or soft parameter sharing of hidden layers.

How to map softMax output to labels in MXNet - Stack Overflow In deep learning, the predictions are often encoded using one-hot vectors. I am using MXNet to create a simple neural network that classifies images of animals as cats, dogs, horses, etc. When I call the Predict method of MXNet, it returns a softmax output. Now, how do I determine that the index of the entry in the softmax output ...
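The Stack Overflow question boils down to two steps: labels are encoded as one-hot vectors for training, and a softmax output is decoded by taking the index of its largest entry. The sketch below shows both in plain Python rather than MXNet's own API; the `CLASSES` list and the probability values are hypothetical, and the class order must match the order used when the labels were encoded.

```python
# Hypothetical class list; its order must match the encoding used in training.
CLASSES = ["cat", "dog", "horse"]


def one_hot(index, num_classes):
    """Encode a class index as a one-hot (hard) label vector."""
    if not 0 <= index < num_classes:
        raise IndexError(f"class index {index} out of range")
    vec = [0.0] * num_classes
    vec[index] = 1.0
    return vec


def decode_prediction(probs, classes):
    """Return (class name, probability) for the highest-probability entry."""
    if len(probs) != len(classes):
        raise ValueError("probs and classes must have the same length")
    best = max(range(len(probs)), key=lambda i: probs[i])
    return classes[best], probs[best]


# Usage with a made-up softmax output for one image.
label, confidence = decode_prediction([0.1, 0.7, 0.2], CLASSES)
print(label, confidence)  # dog 0.7
```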

Learning to Purify Noisy Labels via Meta Soft Label Corrector By viewing the label correction procedure as a meta-process and using a meta-learner to automatically correct labels, we could adaptively obtain rectified soft labels iteratively according to current training problems without manually preset hyper-parameters.

A review of deep learning methods for semantic segmentation ... May 01, 2021 · Semantic segmentation of remote sensing imagery has been employed in many applications and has been a key research topic for decades. With the success of deep learning methods in the field of computer vision, researchers have made a great effort to transfer their superior performance to the field of remote sensing image analysis.

How to make use of "soft" labels in binary classification - Quora If you choose soft prediction, the output of the model would look like [0.9, 0.1], whereas the output from hard prediction would be "0" (the index) or "fraud". The soft prediction gives you more information about the model's confidence in its prediction. The higher the value for the predicted class, the more confident and accurate (in general) the ...

GitHub - jveitchmichaelis/deeplabel: A cross-platform desktop image ... For example, Darknet uses an 80-class file, TensorFlow models often use a 91-class file, MobileNet-SSD outputs labels starting from 1, Faster-RCNN outputs labels starting from 0, etc. You can then run inference on a single image (the magic wand icon) or an entire project (Detection->Run on Project).
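The deeplabel note that some detectors number their classes from 1 and others from 0 matters when looking up a class name. Below is a minimal sketch, assuming the class file is read into a 0-based Python list and that `first_id` is the id the model assigns to the first entry of that list; the helper name and the example names are hypothetical, and whether a given model's file also includes a background entry should be checked against that model's documentation.

```python
def class_name(class_id, names, first_id=0):
    """Look up the name for a detector's output class id (hypothetical helper).

    `names` is a 0-based list read from a class file; `first_id` is the id the
    model uses for names[0] (e.g. 1 for a model whose labels start from 1).
    """
    index = class_id - first_id
    if not 0 <= index < len(names):
        raise IndexError(f"class id {class_id} is outside the names list")
    return names[index]


names = ["person", "bicycle", "car"]  # hypothetical class file contents
print(class_name(1, names, first_id=1))  # person (labels start from 1)
print(class_name(1, names, first_id=0))  # bicycle (labels start from 0)
```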

Multi-Class Neural Networks: Softmax | Machine Learning - Google Developers Recall that logistic regression produces a decimal between 0 and 1.0. For example, a logistic regression output of 0.8 from an email classifier suggests an 80% chance of an email being spam and a 20% chance of it being not spam. Clearly, the sum of the probabilities of an email ...

Labelling Images - 15 Best Annotation Tools in 2022 - Folio3AI Blog SentiSight.ai is a web-based platform that can be used for image labeling and developing AI-based image recognition applications. Developed by Neurotechnology, a developer of high-precision algorithms and software based on AI-related technologies, the platform is the outcome of 30+ years of experience in algorithm engineering. Key features include: ...

Learning Soft Labels via Meta Learning The learned labels continuously adapt themselves to the model's state, thereby providing dynamic regularization. When applied to the task of supervised image classification, our method leads to consistent gains across different datasets and architectures. For instance, dynamically learned labels improve ResNet18 by 2.1% on CIFAR100.

Robust Training of Deep Neural Networks with Noisy Labels by Graph ... 2.1 Deep Neural Networks with Noisy Labels Several deep learning-based methods have been proposed to solve image classification with noisy labels. In addition to co-teaching [5, ...] ... As well as the proposed method, the following approaches utilize a small set of samples with clean labels.
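The Google Developers excerpt relies on two facts: softmax outputs sum to 1, and softmax generalizes the binary logistic output. A small pure-Python sketch of both follows; the function names are illustrative, and the logit `z = log(4)` is chosen only so that the logistic output equals the 0.8 in the spam example.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1.

    Subtracting the max logit first avoids overflow in math.exp without
    changing the result.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    """Logistic function used by binary logistic regression."""
    return 1.0 / (1.0 + math.exp(-z))


# A two-class softmax over [z, 0] reproduces the logistic output for z.
z = math.log(4)           # chosen so that sigmoid(z) = 0.8
print(softmax([z, 0.0]))  # approximately [0.8, 0.2]
print(sigmoid(z))         # approximately 0.8
```

The two-class case shows why an 80% "spam" score implies a 20% "not spam" score: the two softmax outputs are forced to sum to 1.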

How To Label Data For Semantic Segmentation Deep Learning Models ... Deep-learning image segmentation can gather accurate information about such fields, which helps monitor urbanization and deforestation through images taken from satellites or autonomous flying ...

Deep learning model to predict complex stress and strain ... Apr 09, 2021 · In recent years, ML methods, especially deep learning (DL), have revolutionized our perspective of designing materials, modeling physical phenomena, and predicting properties (21–26). DL algorithms developed for computer vision and natural language processing can be used to segment biomedical images (27), design de novo proteins (28–30 ...

Label smoothing with Keras, TensorFlow, and Deep Learning We will not be covering the above implementations today and will instead focus on our two label smoothing methods: Method #1 uses label smoothing by explicitly updating your labels list in label_smoothing_func.py. Method #2 covers label smoothing using your TensorFlow/Keras loss function in label_smoothing_loss.py.

Unsupervised deep hashing through learning soft pseudo label for remote ... Moreover, we design a new objective function based on Bayesian theory so that the deep hashing network can be trained by jointly learning the soft pseudo-labels and the local similarity matrix. Extensive experiments on public RS image retrieval datasets demonstrate that SPL-UDH outperforms various state-of-the-art unsupervised hashing methods.
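Method #1 (explicitly updating the labels) amounts to blending each one-hot row with a uniform distribution: y_smooth = y * (1 - factor) + factor / K for K classes. The sketch below is a pure-Python approximation of that idea, not the code from label_smoothing_func.py; the function name and the default `factor` of 0.1 are assumptions.

```python
def smooth_labels(labels, factor=0.1):
    """Apply label smoothing to a batch of one-hot label rows.

    Each row becomes y * (1 - factor) + factor / K, where K is the number of
    classes, so every row still sums to 1.
    """
    if not 0.0 <= factor < 1.0:
        raise ValueError("factor must be in [0, 1)")
    smoothed = []
    for row in labels:
        k = len(row)
        smoothed.append([y * (1.0 - factor) + factor / k for y in row])
    return smoothed


# One-hot labels for two samples over three classes.
hard = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]
print(smooth_labels(hard, factor=0.3))  # approximately [[0.1, 0.8, 0.1], [0.8, 0.1, 0.1]]
```

For Method #2, Keras offers the same adjustment through the `label_smoothing` argument of `tf.keras.losses.CategoricalCrossentropy`, which smooths the targets inside the loss instead of in the label array.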

Loss and Loss Functions for Training Deep Learning Neural Networks Almost universally, deep learning neural networks are trained under the framework of maximum likelihood using cross-entropy as the loss function. Most modern neural networks are trained using maximum likelihood. This means that the cost function is […] described as the cross-entropy between the training data and the model distribution.
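Cross-entropy compares the target distribution with the model's predicted distribution, so it accepts soft labels as naturally as one-hot labels. A minimal pure-Python sketch, assuming both inputs are probability vectors of equal length; the `eps` clipping value is an illustrative choice.

```python
import math


def cross_entropy(target, predicted, eps=1e-12):
    """H(target, predicted) = -sum_k target_k * log(predicted_k).

    `target` may be a one-hot (hard) label or any probability vector (soft
    label); `eps` clips predictions away from 0 so log() stays finite.
    """
    if len(target) != len(predicted):
        raise ValueError("target and predicted must have the same length")
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, predicted))


hard = [1.0, 0.0]
soft = [0.7, 0.3]
pred = [0.8, 0.2]
print(cross_entropy(hard, pred))  # -log(0.8), about 0.223
print(cross_entropy(soft, pred))
```

With a one-hot target only the log-probability of the true class contributes; with a soft target every class contributes in proportion to its label weight, and the loss is smallest when the prediction matches the target distribution.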

[2007.05836] Meta Soft Label Generation for Noisy Labels The existence of noisy labels in the dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which can jointly generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion.

Data Labeling Software: Best Tools for Data Labeling - Neptune Tagtog is a data labeling tool for text-based labeling. The labeling process is optimized for text formats and text-based operations, to create specialized datasets for text-based AI. At its core, the tool is a Natural Language Processing (NLP) text annotation tool. It also provides a platform to manage the work of labeling the text manually ...

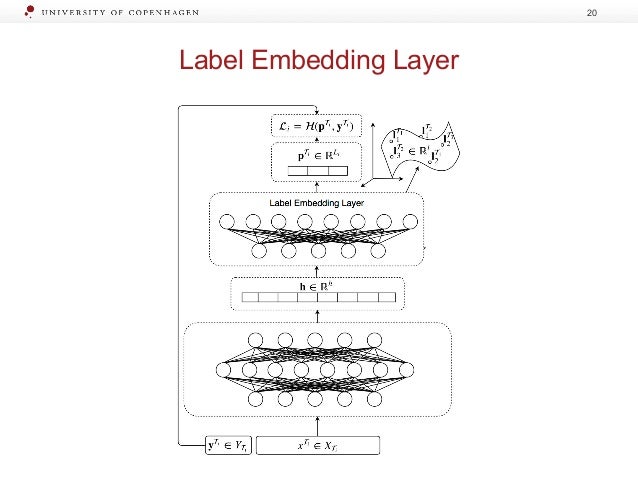

COLAM: Co-Learning of Deep Neural Networks and Soft Labels via ... We study the technical problem of co-learning soft labels and deep neural network during one end-to-end training process in a self-distillation setting. We design two objectives to learn the model and the soft labels respectively, where the two objective functions depend on each other.

A semi-supervised learning approach for soft labeled data Abstract: In some machine learning applications using soft labels is more useful and informative than crisp labels. Soft labels indicate the degree of membership of the training data to the given classes. Often only a small number of labeled data is available while unlabeled data is abundant.

Learning from Noisy Labels with Deep Neural Networks: A Survey As noisy labels severely degrade the generalization performance of deep neural networks, learning from noisy labels (robust training) is becoming an important task in modern deep learning...

Adversarial Attacks and Defenses in Deep Learning Mar 01, 2020 · 1. Introduction. A trillion-fold increase in computation power has popularized the usage of deep learning (DL) for handling a variety of machine learning (ML) tasks, such as image classification, natural language processing, and game theory.

Understanding Deep Learning on Controlled Noisy Labels - Google AI Blog In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...
